UPDATE 2: An angry comment was left on this article where the writer seems to think that I’m against people using their phones to take video. While it is absolutely true that a phone is never going to be a great substitute for a proper camera or camcorder, that was not my intention. I think that people should make videos with whatever they have access to, and if that’s a phone, then that’s great! What I’m trying to do here is push people away from paid products that don’t improve their video while claiming that they do. I have a real disgust for misleading newbies and wasting their time, and things like log gamma on 8-bit cameras make newbies think they’re the reason their footage is bad rather than the inappropriate choice to use log gamma. I don’t want to discourage anyone from filmmaking and videography. This is a guide to navigating a minefield of snake oil that’ll gladly take your money and run, not a detailed document made to hate on cell phone videographers. I also made a follow-up video about the comment and my intentions.

UPDATE: There is now a short video that scratches the surface of what’s explained here if you don’t feel like reading everything below. If you’re interested, give it a watch: “No, This Doesn’t Look Filmic – Shooting log, flat, and LUTs all suck”

Part of being a professional in any field is understanding where mistakes are easy to make and what little details make a big difference in the end result. If you’ve skimmed this blog, you already know that I’m a big obnoxious noise-maker when it comes to shooting down the bad knowledge of “shooting flat” or “shooting log” on most cameras. The reason is simple: basic math and basic understanding of how color is stored says it’s a bad idea. Unfortunately, there is a huge body of YouTube video work out there filled with bad advice. These tend to use phrases like “the film look” and “more dynamic range” and “cinematic shooting” and “make your video look filmic.” The vast majority of these advice videos are poor, and because they’re doing a video to show off how great of an idea it is, the demonstrations often look poor too.

I ran into such a video recently and dropped the usual short nugget about not shooting flat or log on 8-bit cameras and how professionals don’t do that. The person who posted the video responded to my comment and it provided a wonderful opportunity to expound on the subject further. This starts with the video description and each successive comment in the chain. This post’s secondary purpose is to archive what was said. I hope you learn from it, in any case.

The following frame grabs are from the video. Though they will have suffered some degradation from YouTube compression and can’t be viewed as equivalent to what you’d get straight out of the camera, they are DEFINITELY what you can expect “FILMIC PRO LogV2” iPhone footage to look like after it’s been uploaded to YouTube!

The Conversation Begins

Description: “Whoa. FiLMiC Pro LogV2 is here! We’ve been testing it for a few weeks, and…It’s pretty great. Up to 12 stops of dynamic range on the latest iPhone XS and new higher bit rates nearing 140Mbps. It will even breathe new life into your older iPhones (like iPhone SE and 6S). Watch the video to learn more!”

Me: Or just shoot without log nonsense because it doesn’t play nice with 8-bit output files, and get the image right in-camera. You know, like an actual videographer that actually knows how to do video work would do.

Video poster: If you have a log option then you can’t match that in camera. The DR just isn’t there. So shooters that know what they’re doing use it. This log was created to work in 8bit very similar to how Canon Log was designed for 8bit video. They both work great and actually have many of the characteristics of 10bit. For example, the blue sky holds perfectly in grading – no banding that often happens with other 8bit log footage or just 8bit footage in general. You should give it a try.

Me: Dynamic range isn’t that important, especially when we’re talking about a maximum of half a stop obtained by sacrificing color difference information, introducing quantization errors in the corrected footage, and further losing subtle color gradients to macroblock losses in the H.264 AVC compression algorithm. I have run multiple tests on footage shot with a variety of supposedly better picture profiles on 8-bit footage from neutral to “flat” to log and the flat/log footage is always worse: it breaks faster, it has clear loss of fine detail even before any grading is done, which gets even worse after correction, and the dynamic range increase is not enough to noticeably improve the overall look of the footage.

I’m concerned that you said it’s similar to 10-bit footage. That statement demonstrates a fundamental misunderstanding of what 10-bit depth does. It’s a matter of precision. There is no benefit to 10-bit footage that is not being graded or is only going to be minimally graded because the quantization errors introduced across small changes are so small that they result in no visible side effects. When the stored color curves are significantly different from the desired color curves, there are much larger quantization errors introduced in the push back to normal colors and these are responsible for the ugly color issues (especially the plastic-looking skin tones) in corrected flat/log 8-bit footage. 10-bit precision eliminates these quantization errors because it stores (relative to 8-bit color depth) a fractional component with 2^-2 precision that causes the curve change calculations to make much better quantization (rounding) decisions even if the “push” constitutes two full stops worth of difference.

You can get away with weird color profiles for such footage due to the added precision, but 8-bit color is already truncated to the same precision as the destination color depth, so further rounding during correction work behaves almost exactly as if the 10-bit result were simply floor rounded (truncated) as well. Multiple operations can compound the problem further. Look up how a number like 1.1 is stored in IEEE 754 floating-point and why the historic FDIV bug in the first-gen Pentium CPUs was such a big deal for a better understanding of the issue of numeric precision problems in computing. The same principles affect log-to-standard correction of 8-bit footage.
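To make the precision argument concrete, here is a rough sketch of the round trip described above. The "flat" curve is entirely made up for illustration (it squeezes the full tonal range into the middle half of the code space); it is not FiLMiC's curve or any real picture profile. The sketch stores a smooth ramp at 8 or 10 bits, applies the inverse curve as the "correction," rounds to an 8-bit output, and counts how many distinct output codes survive.

```python
def quantize(value, bits):
    """Round a 0.0-1.0 value to the nearest integer code at the given bit depth."""
    top = (1 << bits) - 1
    code = int(value * top + 0.5)
    return max(0, min(top, code))


def flat_encode(t):
    """Hypothetical flat profile: squeeze the full range into the middle half."""
    return 0.25 + 0.5 * t


def flat_decode(s):
    """Inverse of flat_encode, i.e. the 'correction' applied in post."""
    return (s - 0.25) / 0.5


def round_trip_levels(storage_bits, samples=4096):
    """Count distinct 8-bit output codes after storing flat and correcting."""
    top = (1 << storage_bits) - 1
    outputs = set()
    for i in range(samples):
        t = i / (samples - 1)                      # smooth ramp, 0.0 to 1.0
        stored = quantize(flat_encode(t), storage_bits)
        corrected = flat_decode(stored / top)
        corrected = min(1.0, max(0.0, corrected))  # clamp before final rounding
        outputs.add(quantize(corrected, 8))
    return len(outputs)


if __name__ == "__main__":
    print("distinct output codes, 8-bit storage: ", round_trip_levels(8))
    print("distinct output codes, 10-bit storage:", round_trip_levels(10))
```

With this particular made-up curve, the 8-bit path can only ever land on half of the 256 output codes, while the 10-bit path still reaches all of them. Real curves are not this simple, but the mechanism is the same: the more the stored curve differs from the final one, the more output codes go unused and the coarser the gradients get.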

I get a response and I’m not impressed

Video poster: Thanks for the detailed analysis! For most DPs dynamic range (including highlight roll off) and color science are the most important things. That’s really the primary reason the ARRI Alexa has been the king of movies & TV. Even before they had a 4K camera. Now here we’re obviously not talking about that kind of performance or quality, but the new LogV2 increases the DR significantly – 2 1/2 stops equivalent on an XS Max. 2 stops on most other devices. It also has better highlight roll off. So these things alone make it worth using and will give footage a more cinematic feel (something we almost always want).

Regarding 10bit that was stated as “characteristics” of it, which is true in our experience with the example mentioned. It’s obviously not 4:2:2 10bit color space, but like Canon Log which was specifically designed for 8bit video, this new LogV2 performs exceedingly well in this environment. Very low noise and compression artifacts also. Would say the new higher bit rates help here, too

We don’t pretend to understand exactly how all this is done now with computational imaging etc. as we’re filmmakers not video engineers or app developers. But the results speak for themselves.

The best part is this is all just getting started. Can’t wait to see where computational imaging in photography and video can go.

I can respect the cordial reply and I can definitely get behind how exciting of an era this is for people who want to make great video. The tools and education have never been more accessible. None of that has anything to do with my main assertion: flat/log footage on 8-bit camera gear is always a bad choice. As explained in my previous comment, mathematics agrees with me: you can’t magically fit >8 bits of data into 8 bits, then stretch that compressed data back out (using curves to change one set of color curves to match another) and have it still retain 8 bits of precision after that set of calculations is applied and rounded to still fit in an 8-bit space.

Let me now argue against the points made directly here, and then I’ll post my response comment. I’m skipping most of the post because “has the characteristics of 10-bit” is total bullshit; simple math says that a 256-range color channel can’t represent anything close to what a 1024-range color channel can represent, so that entire line of reasoning is off the table. The note about importance of highlight roll-off is correct, but the ballet images earlier in this article demonstrate yucky highlight roll-off, so I’m not sure where the knowledge ends and the marketing blindness begins on that one. Chroma subsampling isn’t implied to be 4:2:2 by them, so that’s an easily dismissed mention. “Very low noise and artifacts” makes me seriously question if they’ve ever looked at how their footage falls apart, even once! As for not being a video engineer or app developer, neither am I. I just happen to have done more than my fair share of research.

Proving them wrong using their own footage.

“For most DPs dynamic range (including highlight roll off) and color science are the most important things” – first of all, where are you getting that information? Do you know most DPs? Do you actually understand highlight roll-off and color science? Your own video pushing this log shooting app for an iPhone shoots down everything you’re saying! Here, take a closer look at some more stuff from the video:

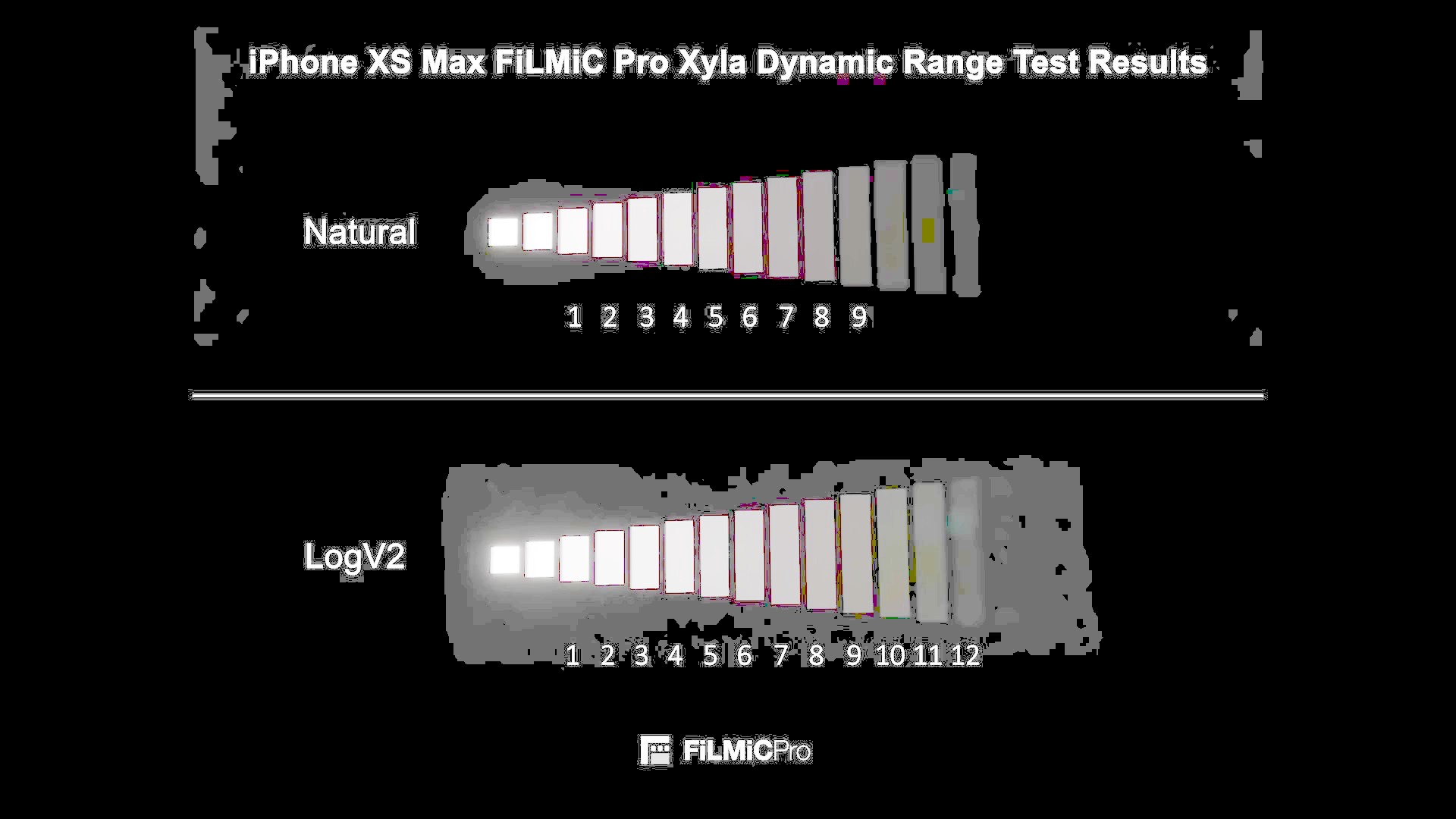

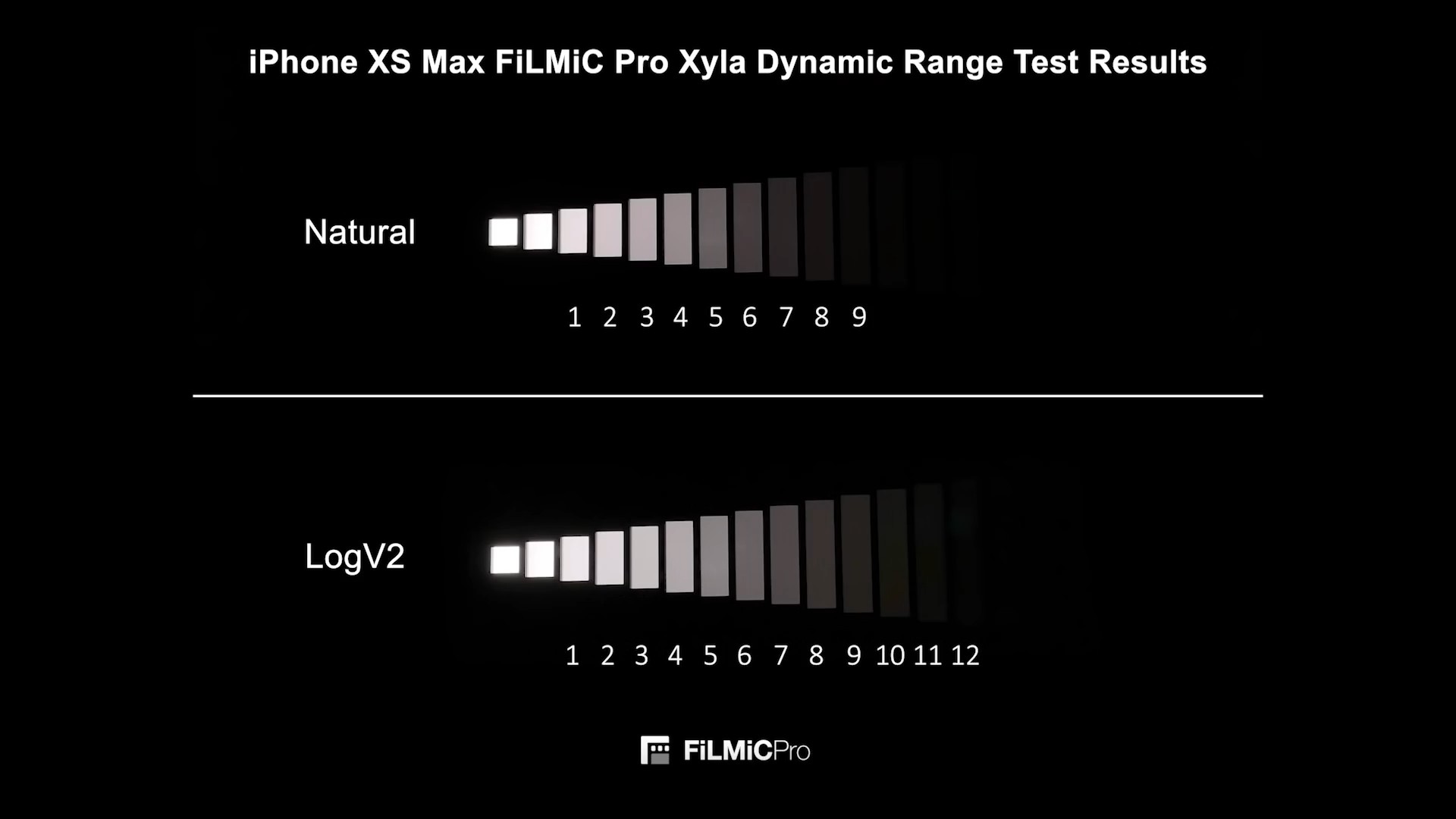

I can see 10 and 11 in “Natural.” They won’t show on a limited range HDMI display due to black levels 0-15 clipping, but they’re DEFINITELY there. Leaving 10, 11, 12 off is intentionally deceptive. Flat styles give you 0.5 stop more dynamic range at best, and their “proof” of 2.5 stops more DR proves them wrong.
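For anyone unsure why near-black patches can vanish on one display and not another, here is a small sketch of the limited-range clipping mentioned above. It assumes the common 8-bit convention where limited ("video") range puts black at code 16 and white at code 235; the code values fed in are arbitrary examples, not measurements taken from the chart.

```python
def full_range_display(code):
    """Relative brightness (0.0-1.0) when codes 0-255 are all shown."""
    return code / 255


def limited_range_display(code):
    """Relative brightness when the display treats 16 as black and 235 as white."""
    return min(1.0, max(0.0, (code - 16) / (235 - 16)))


if __name__ == "__main__":
    # Arbitrary near-black example codes, not values measured from the chart.
    for code in (4, 10, 15, 16, 24):
        print(code,
              round(full_range_display(code), 3),
              round(limited_range_display(code), 3))
```

Anything stored below code 16 still exists in the file and is visible on a full-range display, but a limited-range display shows it as pure black.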

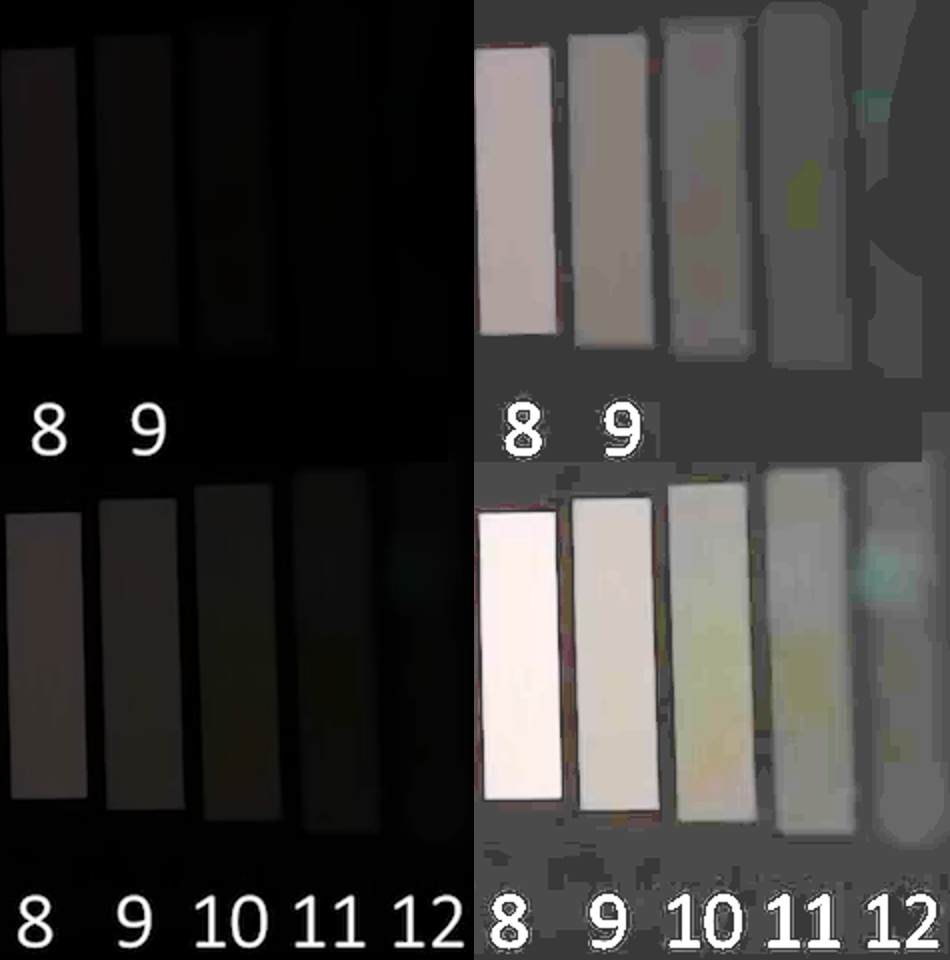

This is my favorite test: use Levels in GIMP to crank up contrast, brightness, and bring shadows into midtones. Here, the truth comes into full view. On the left is a 2x zoom of the right side of the FiLMiC PRO LogV2 Xyla dynamic range test chart above, shown exactly as it came from the YouTube video, while the right is my heavily boosted version that pushes the data to its limits and shamelessly reveals those limits. There are some major things that stand out here:

- “Natural” has non-clipped black data all the way to the “12” slot, so Natural has 12 stops of dynamic range despite the misdirection that it’s only 9 stops.

- The log image on the bottom has brightened shadows, but even at number 8, it’s falling apart. The full damage of dynamic range compression caused by 8-bit log footage is obvious: the “natural” stop bars have more consistent (and therefore useful) color down to at least stop 9 and possibly even stop 10, but the log footage already has inconsistent color at 8 and by 10 it’s jumping all over the place. Even down to 11, the “natural” setting has more consistent and desirable behavior when pushed than the log one does.

- At stop 12, there’s basically zero useful information in either profile other than “clipped black” or “one value above clipped black.” Despite this, the log footage is clearly a worse choice at stop 12: it’s so noisy and so mangled by the H.264 AVC compression’s macroblocks that it’s actually revealing garbage data as if it’s legitimate. This is why flat/log profiles on 8-bit cameras (and even to some extent on ALL cameras) don’t actually help: the lowest blacks are full of noise and those profiles result in amplification of the noise.

- It’s not part of the stop bars, but…do you see how the solid black background around the bars is a dark grey on “natural” and a more medium grey on the log profile? It’s one thing to show near-black as a crumbling noisy region that’s almost completely invisible, but the total-black blacks being brighter on the log profile shows that it doesn’t even use all the bits appropriately all the way down to solid black. It’s wasting bandwidth, so to speak.
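The kind of Levels push used for this test can be sketched as follows. This is a common textbook formulation of a levels adjustment (set black and white input points, then apply a gamma), offered as an illustration only; I am not claiming it matches GIMP's internal implementation, and the parameter values are arbitrary.

```python
def levels(code, black=0, white=255, gamma=1.0):
    """Apply a simple levels adjustment to one 8-bit code value.

    black/white are the input points mapped to 0 and 255;
    gamma > 1.0 lifts shadows toward the midtones.
    """
    x = (code - black) / (white - black)
    x = min(1.0, max(0.0, x))          # clip anything outside the input points
    return int(255 * x ** (1.0 / gamma) + 0.5)


if __name__ == "__main__":
    # Stretch the darkest 32 codes across the whole output range.
    for code in range(0, 9):
        print(code, "->", levels(code, white=32, gamma=2.0))
```

Neighboring near-black codes that are indistinguishable by eye end up dozens of output codes apart after a push like this, which is why noise, macroblocking, and "one value above clipped black" become so obvious.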

That by itself is pretty damning evidence against the log profile they’re marketing, but I got really curious after calling out the missing “10 11 12” and I wondered: “is there anything PAST 12 that just can’t be seen with the naked eye?” I popped the capture of the Xyla chart back open in IrfanView and did a gamma correction as high as it would go to see what would happen. Remember my assertion that you only gain 1/2 stop max of dynamic range by using log profiles, the one that they denied by saying they got 2.5 stops more DR instead of 0.5? Remember that as you look at the following image.

Half a stop later, we’ve seen the light

That’s right! There are some tiny bits of light squeaking through the non-numbered stop #13 on the log profile but not on the “natural” one. It’s clearly not enough to usefully pass through the full stop difference between 12 and 13, but it’s squeaking by in tiny amounts anyway, so that’s probably 1/2 stop of light. Gee, where have I heard “log footage only gives you a maximum of 1/2 stop of added dynamic range” before? Well, I’ll be honest: I originally got all the answers I’d ever want on this subject from an amazing and detailed article on flat/log picture profiles that pulled very few punches when it comes to explanations; I HIGHLY recommend reading it and looking at the excellent graphs and demo images to get a comprehensive understanding of what goes on behind the lens with flatter picture profiles.

While you’re still looking at the image above, take special notice of what the grey area that is supposed to be black looks like. There is inevitably going to be a little bit of light spill from the heavy white side and it’s supposed to taper off as the darkness of the stops increases because that’s just how light works. The “natural” black area has a nice even circle around the brightest stops and it tapers off nicely as it slowly approaches 4. Contrast (pun intended) this with the log profile and you’ll notice that the log profile’s not-quite-black area looks horrible. Not only does it look over-exposed, but there are quality issues all over the place! The smudgy black holes are from compression artifacts being amplified as hard as they can be amplified. It sort of looks like this fancy log profile is mostly just exposing everything higher from the outset. I’ll let you decide what’s going on, but I’m going to say right now that the “natural” profile is the clear winner in this petty little fight.

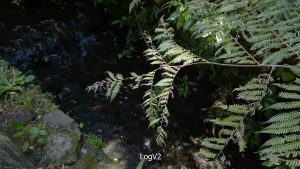

But wait! We’re not done yet! I’ve completely blown the “FiLMiC PRO LogV2” video in question out of the water, yet I have a few more images to show you to seal the deal, plus my response to their response. Here’s a set of images they displayed of a creek in “natural,” LogV2, and LogV2 with a LUT applied to grade it. Look at these images and pay special attention to differences between them:

If you clicked through the three full images and really paid attention, you probably noticed the same thing I did: the sunlight accent on the left side has been all but lost in the log footage! Not only that, but the stream, ground, and glare on the leaves have been brightened so much that the image has lost any sense of depth it had in the “natural” version. It’s…boring and flat now. The log footage has more shadow detail, but the leaves betray the real reason: the log footage was exposed higher than the “natural” footage. It’s not better because it was shot in a log profile, it’s better because the competition was improperly exposed!

My response to their response

Me: 4:2:2 is chroma subsampling and isn’t really an issue with 8-bit/10-bit compared to compressed color curves. How did you determine that you’re getting 2.5 stops of added dynamic range? What was the dynamic range before? Is the dynamic range in question absolute dynamic range or is it usable dynamic range? The video implies that it’s a guess.

I have looked closely at your 1080p ungraded/graded footage in this video (particularly around 4:15) and I find that there are some serious issues with it; ironically, they’re in the places your arrows point: the noise and macroblock compression banding in the blacks before and after grading are unacceptable to me, as is the highlight banding on the ballerina’s right side and the blocky “plastic” look on her face where a smooth gradient should be. The footage immediately after that’s shot “natural” looks drastically better, though the skin tones need to be a bit desaturated. That’s the thing: it’s 100x better to remove that color difference information in post than to throw it away for more dynamic range and be unable to restore it without heavy secondary corrections. Anyone who wants to confirm what I’m saying can skip between 4:15, 4:20, and 4:35 and decide for themselves.

At 1:45, the natural shot clearly has better color, more detail, and less glare, but it was also underexposed relative to the log footage; since it’s not a high-contrast scene, you could have easily exposed the natural footage a bit higher to retain more of the stream detail. Also notice on the left at 1:35, you’ve got a shining bit of sunlight; that shining bit of sunlight is largely lost after the log grade is applied at 1:41; in fact, the lowered contrast is less attractive because there is no visual depth.

At 8:45, the doll image from the phone is more washed out and obviously lacking in fine detail, most noticeable in the eye detail and in the sharpness of the stripes on the dress. The color lacks a punch that’s visible on the right. I am aware that some of this is because it’s a phone. The results aren’t bad for a phone, but the results are nowhere near equivalent. In the end, I suppose what matters is that the person watching the finished product likes it, so do what works for you. I like a healthy dose of contrast and color, but the washed out desaturated look seems to be all the rage these days, so who am I to argue with the trend?

What should you take from all of this?

tl;dr: Don’t shoot flat without 10-bit color and a professional-grade workflow or you’re trashing your footage, regardless of what some “expert” on YouTube says. It doesn’t look like film, it just looks like amateur hour.

Supplemental information about dynamic range measurement with Xyla charts: Is it really possible to measure a camera’s dynamic range?

Looks like some drooling guy is trying his best to prevent people from shooting good videos with phones. What went wrong, buddy? Mad because some phone kid shoots better than your DSLR?

Funny how you take videos with just a LUT thrown on them.

I’m trying to prevent people from shooting garbage videos with phones. Bolt-ons that apply log gamma curves to phones that produce 8-bit compressed output and already have a lot of imaging limitations due to the phone form factor do nothing but wreck the footage that gets shot with them. I wouldn’t be “mad that some phone kid’s shots are better than my DSLR,” I’d want that phone kid to tell everyone how they did it so that more people could do it. I think it’s quite admirable when people take a limited piece of equipment and squeeze as much value out of it as they can; that’s the spirit of a true hacker, and something close to my own heart.

I don’t know where you got this notion that I’m hating on people using phones. What I’m hating on is those who sell the phone camera equivalent of snake oil to budding phone cinematographers that actually worsens the condition of their footage. If I am bitter about anything, it’s all the “experts” that tell you to do inappropriate things with your hardware that mislead you into thinking that they’re professionals recommending pro tools and that if your footage is wrong when using those “pro tools,” you’re doing something wrong. Of course, you probably didn’t watch my videos about not shooting flat or about how flat, log, and LUTs all suck (which is strange, considering it’s literally the first thing you’ll see a link to on this article), but I guess I can’t expect you to do research before you try to blast someone that bothered you with an article on the internet.

Now I’m going to put you on blast. You accused me of “taking videos with just a LUT thrown on them.” Sorry, bud, but I don’t use LUTs. I get all of my shots right in-camera, using the standard picture profile of the camera. These days, the vast majority of my own videos that don’t explicitly require color-change effects don’t even get a color corrector effect on the video at all because they’re already lit, exposed, focused, and white balanced properly from the start, and I don’t use flat settings or alternative gamma curves when I shoot.

What’s your real problem? Why did this article make you angry enough to leave this comment?

This is TRUE! 8 bit needs to be in Rec. 709

Well said.

As an amateur hobbyist trying to learn and shoot better images, I have found too much misinformation on the web, most of which I feel is people not wanting to question themselves or the people they get their information from. Too many will defend a position rather than check the data it’s based on, if there was any to begin with.

As for “Getting it right the first time” in camera, it’s solid advice for any skill level. Fixing it in post only goes so far.

Currently experimenting with Magic Lantern Dry OS on Canon 50D and 70D using a 10/12 bit raw app.

Hey, just want to say “thank you” for writing such a great article. As a director at an ad agency, I run into this conversation all. the. time. So many young video “pros” shooting 8-bit log and spouting dynamic range and color grading benefits. Funny how they spend more time trying to “correct” their log footage and getting worse results than they would straight out of camera. But, I was one of them! Then I said, “wait a minute, this doesn’t look better.”

There’s a LOT of misinformation out there, from “pros” (who often have never worked for a production house), mixed with a change in taste…a lot of my clients like the flat look, and request flat footage. They actually LIKE the flat look, and use it, ungraded, in their videos! I digress…

You can’t really control what the client wants, and as long as you’re getting paid, it’s their peril and their problem! The hardware store doesn’t care if I’m using the screwdriver to hammer nails, they’ll sell it to me anyway. I appreciate your feedback and I’m glad to have your support for what I’m trying to say. I tried to be fair about where flat or log shooting IS acceptable, but I feel like most people who are angry that I’m speaking contrary to what their video gods told them never actually read far enough to see that attempt at a balanced presentation.

Informative article. This makes me question the intended target audience for videos like the one iPhoneographers posted – it’s a simple format that seems like it’s for the casual hobbyist but discusses a feature that should be used carefully by professionals. I wouldn’t say they in particular are peddling anything: they do have LUTs on their website, but those are free, and as far as I know they don’t receive any kickbacks from Filmic for any purchases of the cinematographer’s kit.

I was wondering if you had a chance to check out Richard Lackey’s Dubai footage. It’s shot on the iPhone 11 Pro using Filmic Log V2 and looks fantastic. He also uses a diffuse filter, variable ND, and an X-Rite color chart, then uses a custom transform to map the log footage to Arri’s Log C before returning to Rec709. I noticed this article mentioned most log profiles squeezing the sensor data into the 8-bit container with obvious downsides and increased noise that steals bandwidth, but didn’t mention that the Filmic Log doesn’t simply squeeze the data before encoding. Unlike Technicolor’s Cinestyle for Canon DSLRs, Filmic did release a white paper. It takes the sensor data and remaps it in a 10-bit environment, generating completely new pixels, before encoding back in the 8-bit container. They also process the luminance and chrominance separately, if that makes a difference (I’m an amateur myself, so not sure). I feel the general rule about getting it right in camera is best, considering log can be a fragile crutch. But after seeing the white paper, Richard Lackey’s work, and experimenting with it myself using a color chart, I can’t fully get behind the idea that it’s a waste. It definitely can help if it’s done right, even if it isn’t adding 2.5 stops. I’m pretty sure the iPhoneographers were comparing the Rec709 natural footage to the log footage, which isn’t a fair comparison, since the log footage, once corrected, only adds about half of a stop. However, according to the article you linked, this can increase the amount of *usable* detail by more than half a stop. Let me know what you think.

I don’t have an iPhone, FiLMiC Pro, or any of the other things mentioned that are required to try to replicate his process. A lot of what I’m discussing is based on math rather than anecdotal experience or examining specific work products (though I have obviously done my fair share of testing regardless of the numbers). I strongly prefer to keep the discussion in mathematical terms for two reasons: math explains why mismatched raw/final gamma curves with raw precision <= final precision are a bad idea, and math is not subjective.

None of the intermediate stuff that you described actually matters. In the end, it's all just shifting values according to equations, and the 8-bit raw footage format is the mathematical bottleneck. Nothing done prior to 8-bit quantization will "pack more data" into that 8-bit depth space. It's a matter of what data ends up in that space, not how much. A 12-bit sensor capture being quantized to 8 bits isn’t technically any different from a 12-bit sensor capture being quantized to 10 bits and then the 10-bit data being quantized to 8 bits. All that matters is how close the 8-bit output comes to the desired result, and any deviation means correction, and any correction technically equals a loss of quality; more correction results in more quantization, and while some editing programs may perform interpolation to reduce the effects (I don’t actually know if they do this, but I would imagine they do) it won’t fully remove the quantization errors (aka “damage”).
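The point about intermediate bit depths can be checked with a quick sketch. It reduces every possible 12-bit code to 8 bits directly and via a 10-bit intermediate, using two generic reduction methods (truncation and round-to-nearest). These are illustrative choices; I have no idea which method any particular camera or app actually uses.

```python
def truncate(code, from_bits, to_bits):
    """Drop the low bits (floor rounding)."""
    return code >> (from_bits - to_bits)


def round_nearest(code, from_bits, to_bits):
    """Round to the nearest code at the lower bit depth, clamped to its maximum."""
    shift = from_bits - to_bits
    top = (1 << to_bits) - 1
    return min(top, (code + (1 << (shift - 1))) >> shift)


def max_difference(reduce):
    """Largest gap between 12->8 directly and 12->10->8 over every 12-bit code."""
    worst = 0
    for code in range(1 << 12):
        direct = reduce(code, 12, 8)
        staged = reduce(reduce(code, 12, 10), 10, 8)
        worst = max(worst, abs(direct - staged))
    return worst


if __name__ == "__main__":
    print("truncation, max difference:", max_difference(truncate))
    print("rounding,   max difference:", max_difference(round_nearest))
```

With truncation the two paths are identical for every input. With rounding at each stage they can disagree by at most a single 8-bit code because of double rounding, which is a loss rather than a gain. Either way, the 10-bit detour does not put any extra information into the 8-bit result.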

There is obviously a LOT of merit to making changes in the higher bit depth space before quantization; that’s why picture profiles exist in the first place, and why custom picture profiles at least potentially can result in a superior product. If you could create a picture profile that caused the camera to output the final desired look in the raw footage, that would simply be perfect…and yes, if the camera supports loading custom picture profiles, you just might be able to do that if you really wanted to. In the real world, however, we often don’t know the particular look we’re going for until we’re editing; in fact, the look often changes several times during editing, if my experience is any indication. If you shot in a picture profile with a blue cast and a higher-contrast look but then decided you wanted a yellow cast and a lower-contrast look, what once was a big help suddenly becomes extra trouble, thus why almost all footage is shot in some sort of relatively neutral picture profile and color-corrected and then color-graded in post-production.

Regarding the whole “more stops” question, the problem becomes far more subjective, but I have to explain gamma curves in general before digging into that point. 8-bit depth is inherently limited, and with a linear gamma “curve,” an 8-bit luminance channel can only store 8 stops of dynamic range by definition (each bit represents a doubling of the value, thus each would be one “stop” of data). Obviously, having 2 values for near-black shadows and 128 values for near-white highlights is horribly lopsided and a waste of space for the purposes for which we use video. The standard gamma curve used by practically everything with a screen these days “packs more data” into those bits (yes, it sounds like I’m contradicting myself, but bear with me) and it does so by distributing the data differently. To do this, you must have more data than the final output format, which is a big reason why raw sensor data has much higher bit depth than the output file: to map 12-bit linear values into an 8-bit space based on a particular gamma curve, you’ll need to measure with higher precision, especially in the dark areas. (Read the Cambridge in Colour article about gamma correction for a very good explanation with excellent graphics.)
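Here is a small sketch of that lopsidedness. It counts how many 8-bit code values land in each stop below peak white for a linear encoding and for a gamma encoding. The gamma curve here is a plain 2.2 power function, which is a simplifying assumption: real standards such as sRGB and Rec. 709 use slightly different curves with a linear segment near black.

```python
import math


def linear_decode(v):
    """Linear encoding: normalized code value equals linear light."""
    return v


def gamma_decode(v):
    """Simplified display gamma (pure 2.2 power curve, an assumption)."""
    return v ** 2.2


def codes_per_stop(decode, stops=8, bits=8):
    """Count code values per stop; index 0 is the brightest stop."""
    top = (1 << bits) - 1
    counts = [0] * stops
    for code in range(1, top + 1):           # skip 0, which is pure black
        light = decode(code / top)
        stop = int(-math.log2(light))        # 0 for (0.5, 1.0], 1 for (0.25, 0.5], ...
        if stop < stops:
            counts[stop] += 1
    return counts


if __name__ == "__main__":
    print("linear:", codes_per_stop(linear_decode))
    print("gamma: ", codes_per_stop(gamma_decode))
```

The linear encoding spends half of all its codes on the single brightest stop and leaves the darkest stops with one or two codes each. The gamma encoding takes codes away from the top stop and hands them to the darker ones, which is the "distributing the data differently" part.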

If you wanted to represent 12 stops of dynamic range across 8 bits with roughly equal levels of detail (curves aren’t even but this is a simplistic illustration of the concept), you’d need to store (2 ^ 8) / 12 ≈ 21 individual values per stop. You’ll notice that the 12 stops in this example are suspiciously close to the 11-12 stops of dynamic range that most digital cameras get at base ISO, and that’s not without good reason. What happens with log curves (and arguably with “flattening” which is really a crude attempt to do the same thing) is that the distribution of values used to map the sensor data to the output data is shifted; typically, the highlights and shadows are given more values while midtones are given fewer values. This means more of the near-blown or near-crushed areas have more precision while the “normal” areas have less precision. If you are shooting a high-contrast scene such as the building shots in the video you referenced and you want to reduce the contrast in post-production, it actually makes sense to use a curve that allocates more values to the extremes. Yes, the shots in that video generally look pretty good, but most of that comes down to the skill of the shooter, not the use of log gamma. In fact, if you look at the color in the images, you’ll notice that there is both an unnatural pastel-ish tone and the images have low contrast. I’m going to guess that this was an intentional choice to reduce the effects of converting the log gamma back to standard gamma completely. I took the liberty of pulling a frame from the Richard Lackey Dubai video into IrfanView and stretching the contrast out to a more normal range so you can see what happens to the histogram and image when you pull it back out to a normal level of contrast. In that particular shot, I’d say that the reduced contrast works to some extent because of the windows and the brightly lit wall, particularly because both have visually interesting detail.
I personally prefer a contrast-boosted and slightly less saturated version of that final shot. Had there been a person in the shot, there is a very good chance that their skin would look “plastic” and would not be recoverable, but Richard chose to shoot non-animated mostly-artificial objects lit by daylight, which are probably the most compelling candidates for the use of log gamma. Indoor scenes or scenes with humans tend to fare poorly when you’re throwing away midtone precision to boost shadow/highlight precision. Skin tones in particular are mostly in those stripped-away midtones, with a few very dark or very pale exceptions.

Thus, we’ve come full circle: in the end, images are art, and art is subjective. Yes, you can sometimes produce good images from log gamma footage on an 8-bit camera, and high-contrast scenes in particular could ultimately end up looking better, but “better” is a personal aesthetic judgment rather than a technical term. Some people think a washed-out low-contrast image is “more filmic” and “like watching it in a theater” while I will probably think such an image looks like grey hazy crap because the silver screen having lower contrast is a bug, not a feature. Who is wrong? I mean, I can rant all day long about how contrast is how humans survived in nature for tens of thousands of years and we’re hard-wired to find differences in contrast particularly stimulating, but if the guy who likes washed-out garbage enjoys it, why should he care about any of my babbling? He likes it and that’s all he cares about.

If you think about it, neither you nor I nor anyone else cares about things like “stops” and “dynamic range.” We want to look at things we find visually pleasing. An extra stop of dynamic range might do that for a high-contrast shot of Dubai’s buildings in the sunset, but the sacrifices made to get that stop might ruin a shot of friends talking at a table in a restaurant. With all of that being out of the way, I fall back to the technical arguments. Our eyes like contrast and are more perceptive of midtone detail, so the standard gamma curve is well-tuned to what our eyes want. Shifting in post from log gamma to standard gamma with 8-bit input and output files loses midtone detail that our eyes will not like losing and discards the added shadow/highlight detail that the log gamma is meant to preserve, while trying to stick closer to log gamma by leaving the contrast reduced gives the entire image an unattractive “washed out” look. As a general rule, log gamma with 8-bit output files is a bad idea, and the small potential benefits in high-contrast scenes probably aren’t worth the added workflow and reduced elasticity of the raw footage.
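The midtone loss from an 8-bit log round trip can be sketched in a few lines of Python. This is a toy model under stated assumptions, not any camera's actual pipeline: the "log" curve is a hypothetical pure log2 mapping of 12 stops onto 0..255, the "standard" curve is a plain 2.2 power law, and "midtones" are taken to be one stop either side of 18% gray.

```python
import math

STOPS = 12  # assumed scene range covered by the hypothetical log curve


def encode_gamma(x, gamma=2.2):
    """Linear light (0..1) to an 8-bit code via an assumed power-law curve."""
    return round(255 * x ** (1 / gamma))


def encode_log(x):
    """Linear light to an 8-bit code, spending codes evenly per stop."""
    x = max(x, 2.0 ** -STOPS)  # clamp to the darkest representable value
    return round(255 * (1 + math.log2(x) / STOPS))


def decode_log(code):
    """Inverse of encode_log: 8-bit log code back to linear light."""
    return 2.0 ** ((code / 255 - 1) * STOPS)


def distinct_midtone_codes(lo=0.09, hi=0.36, samples=10000):
    """Count distinct 8-bit output codes in the midtones.

    Returns (direct, via_log): codes produced by encoding straight to
    the power-law curve, versus encoding to 8-bit log first and then
    converting that to the power-law curve in "post".
    """
    direct, via_log = set(), set()
    for i in range(samples):
        x = lo + (hi - lo) * i / (samples - 1)
        direct.add(encode_gamma(x))
        via_log.add(encode_gamma(decode_log(encode_log(x))))
    return len(direct), len(via_log)


if __name__ == "__main__":
    direct, via_log = distinct_midtone_codes()
    print("distinct midtone codes, shot in standard gamma:", direct)
    print("distinct midtone codes, shot in 8-bit log then converted:", via_log)
```

The second count cannot exceed the number of log codes that covered the midtones in the first place; once the file is quantized to 8 bits, no conversion in post brings the missing levels back.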

Sorry for the wall of text. I may have gotten carried away.

Excellent response, thank you. You’ve changed my mind on the religion of only using log in any scenario. Lower contrast scenes (especially with actors) should be shot with the standard profile and that seems to be lost on fanatics, myself included.

I would like to add that while there is no denying that mathematically there is damage done to the data when it is stretched too thin across the gamut, I think we can agree that not all damage is equal. Some types of damage can be nearly imperceptible, even to professionals, and that’s before we consider subtle uses of debanding, adding mid-tone detail, and denoising (and there are some great plugins that don’t take away from the detail we like or look too artificial). A degradation in chromatic fidelity by shooting log would have a lesser impact on someone shooting for B&W. Trading some mid-tone detail for more shadow/highlight detail might enhance a scene where the focus of the shot is purposefully under/overexposed.

I guess my only suggestion left would be to consider an alternate title: “YouTube video experts don’t understand *when* flat/log footage on 8-bit cameras is a bad idea.” It sounds like it could be more than half the time that it’s a bad idea. I’m just a fan of people pushing popular, limited hardware (like 8-bit 4:2:0 footage) to its limit, which would also explain my fascination with the Raspberry Pi computers and their countless applications.

Thank you again for the thoughtful response.

It’s true that damage doesn’t matter until it affects the quality of the apparent image. That being said, the reason that I blanket advocate against shooting log/flat on 8-bit is that it is very sketchy when it comes to using it properly. Deciding if a scene could benefit from it is difficult as-is (does the shadow or highlight detail actually matter that much?) and getting the wrong answer has the potential to be very costly, up to and including compromising a no-redo shot. Then there is the precision of actually shooting it. I commented on the video you mentioned to me and Richard Lackey confirmed this in his reply: to get what he got, he had to be extremely careful and shoot in a very particular way, and his exact statement on shooting 8-bit log was “it’s a minefield.” The risk/reward ratio is pretty poor, so most people should not even try.

That being said, Richard Lackey’s results speak for themselves. He clearly put a ton of effort into mastering that narrow path where you get the benefits. If someone wants to learn that path, I say go for it, but they need to know where the edges of that path are before trusting footage of any worth to 8-bit log. My intended audience is amateur filmmakers more so than seasoned filmmakers, and my assumption is that the vast majority of people who would buy FiLMiC Pro will be somewhere closer to “amateur” than to “seasoned.” If I were trying to speak to seasoned filmmakers, I’d probably change my approach to suit. My goal with this post and posts/videos like it is to prevent people who are not seasoned from buying into hype that has a serious negative payoff for them. It hampered my progress at the beginning, and I wasted a lot of time because of it. I don’t want people to repeat my mistakes. At the same time, people who know what they’re doing and want to add more difficult high-end skills to their already large skill set should feel free to try it out and figure out if it holds any value for them (thus my mantra “test, don’t trust”).

I’m also a fan of pushing things to or past their perceived limits, and I appreciate that drive. At the same time, to use a computer analogy, someone who just started coding probably should not be attempting to implement their own C compiler and is almost guaranteed to fail to even get a simple recursive-descent parser working. (By the way, here’s my recursive-descent parser: https://github.com/jbruchon/jodycalc)

I appreciate all of the thoughtful discussion you’ve brought to the table here, and for showing me Richard’s work. I may do a follow-up article to clarify the things we’ve discussed. It seems like an appropriate next step, don’t you think?

I agree 100%. I recorded a short clip last night on my iPhone 7 using the natural setting with a color chart and I verified it was much easier and faster to correct and get a clean final image with great range and proper contrast. I also noticed that switching to log after getting proper exposure in the natural setting does require stopping down by 1/2 to get similar exposure with a gray card, although the app wanted to expose higher by about 1 stop from the natural exposure.

I dabbled in BASIC and Python (HTML was a bit boring) for a bit before deciding I should try C++. I bit off way more than I could chew and decided it was better to take it slow and have fun with Python and little OpenGL demos for now. I’ll check out your parser after work with my buddy who has a degree in computer science.

A follow up article would be pretty cool. You can email me at jakemandujano@gmail.com if you’d like and I can supply some example shots from my current phone (and maybe soon an 11 Pro) comparing natural and log 4k grabs from Resolve, both corrected and uncorrected. The only problem I’ve been having is with the green and magenta chips on my Datacolor SpyderCheckr 24 (green tends to be very desaturated and magenta behaves better if I pick a hue point halfway between magenta and red), but maybe you could correct it instead.

Thanks for the response.

Hello, I don’t know if you’d like to know, but there is finally a capable app for shooting 10-bit color depth video that lets you choose and set a logarithmic curve (at sensor level!) with manual controls.

mcpro24fps app (only for Android)

https://play.google.com/store/apps/details?id=lv.mcprotector.mcpro24fps&hl=es_419

(10-bit profile and colour space outputs depend on the OEM’s implementation). I’m not the developer, I am just a happy customer 😉

Good article, anyway. The maths is important to keep in mind, but art is the reason why we make videos, and sometimes the latter can win over the former. 😉

Great read! The reason why I’m steering away from Cinestyle for most shooting on my Canon 90D.

Have you looked into VisionColor/VisionTech? They offer a color profile that is very careful to not do what you are describing:

“By transforming linear RAW sensor data to a logarithmic curve and thereby further compressing the range to even less values, all tonal gradation in the 8-bit coding space is sacrificed.

Chroma banding and severe image degradation are often the result when color grading logarithmically encoded 8-bit compressed footage back to a regular Rec709 or sRGB viewing gamma.”

They skirt all the negative issues you’ve presented while still giving a slightly flatter image with really nice colors I find pleasing. They have essentially created a slightly enhanced version of Canon’s neutral profile with just enough flatness for my use.

Look at this page and go down to their technical specifications and see what you think. A lot of people swear by them with similar concerns to your own. I think I feel pretty confident using it.

https://vision-color.com/products/picture-styles-canon-eos/

I probably will not bother with it because I don’t see the value in these picture profiles relative to the effort to add and post-process them. I shoot Standard on all my gear because it looks good straight out of the camera and I am generally shooting stuff that I either want to churn back out quickly or will shovel into a stock footage folder and possibly never share. In either case, it’s easier to have stuff that can be either uploaded as-is or that doesn’t require work beyond straight editing. I also just don’t feel like loading picture profiles into my cameras in the first place. The best DR boost that I’ve ever seen anyone get out of any flat/log profiles is 1/2 stop, and the loss of color fidelity needed to get that is just not worth all of the trouble. I think it’s too easy to get stuck in technical quagmires like agonizing over picture profiles when that time could be better spent doing almost anything else.

I also moved from Canon to Panasonic when I got a Panasonic G7 and realized that Panasonic’s cheaper camera had tons of “pro” video features for a much lower price. Canon cripples their cameras and I refuse to buy them anymore because of it. They started the DSLR filmmaking revolution, but other manufacturers have long since blown them out of the water. The holy grail of DSLR filmmaking today is the Panasonic GH5S and it’s so much cheaper than the 5Dmk4 that you can get a pro lens for the cost of the 5D body alone.

Thank you so much for your lengthy article and insight; I’ve learned a lot from it. From what I gather you shoot strictly with no PP at all. Am I correct to assume that? I also like your comment about Panasonic cameras. I personally haven’t used Canon cameras but do run a Sony FS5 and a7S. Have you tried Sony at all or are you planning on continuing to use Panasonic?

Again, thanks for this great article. I will for sure be testing all these things out and will probably end up shooting natural from now on.

The only question I haven’t been able to answer is matching color for different cameras. Every camera has a different look, and matching color between Sonys and Canons or ARRIs is already difficult in log profiles. What do you suggest doing in such a situation?

If by PP you mean post-production then no, that’s not correct. I shoot to minimize the need to do image corrections but I don’t expect to eliminate it entirely. Most of the time I still boost saturation, slightly sharpen, and tweak the contrast/shadows/highlights in my editor to taste, but my goal is for most of the things I shoot to still look good without corrections. I always expose to the right (ETTR) but tweak it for the best look on subject(s), not the raw histogram, so some of my footage does look a little bright or dark to increase my potential for image correction later, but not unacceptably so (so it still looks good without).

I have used several Sony cameras, primarily camcorders. I have not used their large-sensor cameras but every comparison video I’ve seen between Sony bodies and Panasonic bodies is full of Sony fanboys in the comments declaring how clearly Sony beats the crap out of Panasonic, yet I look at the same side-by-sides and don’t see any reasonable path they could have taken to come to that conclusion. This is the video that was in my mind when I typed that. Set the resolution on the video to 2160p, go to 0:47, pause it, and full-screen the video. Look at the wall texture and the lines on the suit. The Sony appears to have better noise performance (the door suffers because of this) yet the Panasonic GH5S obviously resolves a lot more detail than the Sony a7S II. This is because Sony’s “good noise performance” is supposed to come from having 4x the surface area of the GH5S sensor, but it’s really because all Sony cameras seem to use very aggressive noise reduction which permanently discards detail from the output image. In fact, you’ve inspired me to finally point the differences out in an image that you can look over for yourself (click for the full 2560×2880 image):

If you need to match between cameras: set every camera to the “neutral” picture profile (some cams call it “natural”), crank noise reduction all the way down (off) if possible, and hold up a color checker card. Some editors have the ability to automatically match footage based on the color checker card. No method is perfect, of course, but this will make most matching tasks a lot easier. Canon is especially notorious for boosting reds within certain color tones to try to make skin and red objects look better, but this has the unfortunate side effect of making other things look too red; a neutral/natural profile shuts this kind of “portrait” coloring off, leaving you free to define curves and colors in your editor to suit your specific needs without baked-in biases. If you can, it’s best to just use the same brand and type of camera all around. Mixing camera types will always make things more difficult. (Sadly, I say this from experience.)

Hi, thanks for your article. I’m super green, kinda exactly who your article is intended for. I’m wondering how much (if at all) this changes now that the iPhone can capture 10-bit colour? If you can shoot (e.g.) in FiLMiC Pro accessing the iPhone Dolby Vision/HDR capability, does it then start to become less of a ‘minefield’ to use log or flat profiles? Thanks 🙂

If it can actually produce 10-bit output files then there may be enough latitude to use log curves. You’d really need to do some A/B tests to find out. The big problem with phones is that their sensors are small AND their optical systems are limited and represent a pretty tough compromise. There is no way to smash a great lens into such a tiny form factor. If it’s all you have then it’s great to make the most of it, but frankly, you’re better off with a used premium point-and-shoot like a Panasonic LX7 than you are with any iPhone. Remember that things like 10-bit depth and 4K video don’t mean the material being stored has that level of precision, only that the quantized values do. That’s why DxOmark rates lens-camera combinations with a number they call “perceptual megapixels” which is often far lower than the actual sensor megapixels. If the optical system doesn’t pass through sufficient sharpness or color fidelity, all the megapixels and bit depth in the world won’t make the actual visual quality any better.

My mantra is “test, don’t trust.” Shoot the same shot in multiple ways and take it to your editor. See what you can do with each. Push the picture harder than you normally would–crank that contrast, boost the shadows, drop the highlights, and watch to see when banding and blocking hits. Then you’ll know what you are really getting out of it all. You may find that 10-bit on a phone is a waste, or you may steal 1/2-stop of extra dynamic range for “free.”
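For anyone who wants to see why pushing contrast exposes banding before trying it in an editor, here is a minimal Python sketch. It assumes a simple linear stretch between a chosen black and white point; real editors use more elaborate curves, and the function names are made up for this illustration.

```python
def stretch_contrast(codes, black, white):
    """Linearly stretch 8-bit codes so `black` maps to 0 and `white` to 255.

    Values outside the black..white range are clipped. This is an
    assumed, simplified stand-in for an editor's contrast control.
    """
    out = []
    for c in codes:
        v = round((c - black) * 255 / (white - black))
        out.append(min(255, max(0, v)))
    return out


def largest_gap(codes):
    """Largest jump between neighboring code values that are actually used."""
    levels = sorted(set(codes))
    return max((b - a for a, b in zip(levels, levels[1:])), default=0)


if __name__ == "__main__":
    # A smooth ramp that only occupies the middle half of the 8-bit range,
    # roughly what low-contrast footage looks like on a histogram.
    flat_ramp = list(range(64, 192))
    pushed = stretch_contrast(flat_ramp, 64, 191)
    print("levels used before:", len(set(flat_ramp)),
          "largest gap:", largest_gap(flat_ramp))
    print("levels used after: ", len(set(pushed)),
          "largest gap:", largest_gap(pushed))
```

The stretch cannot create new levels; it only spreads the existing ones further apart, and those wider gaps are what show up as visible bands in smooth gradients such as skies and walls.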

Thank you for this explanation (and for sticking your neck out). This confirms my own testing that the natural curve gives more consistent and more pleasing results than the logs in FiLMiC Pro with a color chart on an 8-bit iPhone 11 and lots of light (more color to work with, more contrast to work with, better final look). Now I know why and that it wasn’t something I was missing.

P.S. no ssl certificate???

Glad to help. No, I don’t have SSL on most of my websites. My provider doesn’t support Let’s Encrypt and I refuse to pay for a cert for every single website every single year.