DVD Format History

The DVD (digital video disc) format has been around since the 1990s, and while Blu-ray ultimately took over when high-definition video became widely supported, the venerable video DVD is still the go-to format for a lot of purposes. Virtually every Blu-ray player and most disc-based game consoles can play a DVD, as can every computer with a DVD drive (most computers built from about 2008 to 2017 came with a DVD burner), and DVD players for televisions have been sold for well over two decades now, so it's a very reliable way to hand off videos to people of all levels of technical expertise. I still offer DVDs for sale through the Jody Bruchon DVD shop, and Redbox is clearly making enough money to stay in business as well.

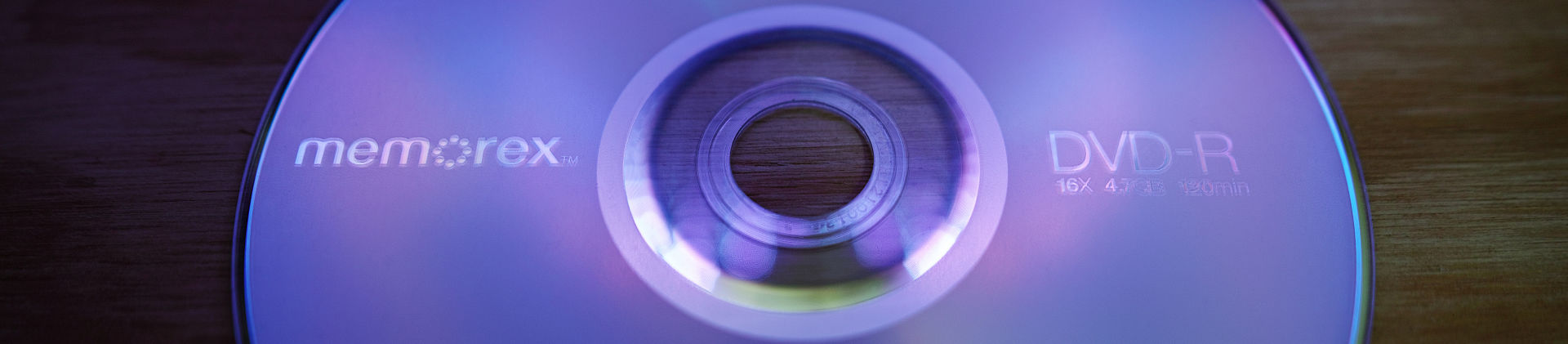

I’ve been creating DVDs for a long time, both personally and for Gazing Cat Productions clients, and I’ve pretty much seen it all. DVD player compatibility is always a major concern, but it’s pretty much impossible to burn a DVD that works with all players. The earliest DVD players came before DVD-R media was available, and DVD+R media wasn’t officially added to the DVD specification until 2008. DVD-RW is notoriously troublesome in non-RW drives, too. (If you want a complete overview of DVD recordable formats, Wikipedia has very good summaries.) Suffice it to say that DVD players can be grouchy about certain DVD disc formats, so DVD-R is the only reliable choice…and yet, a small number of players out there won’t even work with that. There is a whole host of pitfalls in the authoring and encoding of the data that actually goes ON those discs, too, which leads us to today’s absurd first-world problem.

The Customer Drops Off

A gentleman brought a DVD to my doorstep. It contained a bunch of JPEG image files that he was trying to use as a slideshow for a person who had passed away, but his DVD player wouldn’t play them properly. I created properly authored video-format DVDs out of the JPEG images and they seemed to play OK on the computer, but because there were hundreds of images, I didn’t watch the whole slideshow; I only confirmed that the DVD auto-played and looped properly and that a few photos worked OK. The computer seemed to have no problem at all with the DVD media. I gave him the discs along with a warning that DVD compatibility can be an issue and that if he had any problems, I wanted to know.

The Customer Had Problems

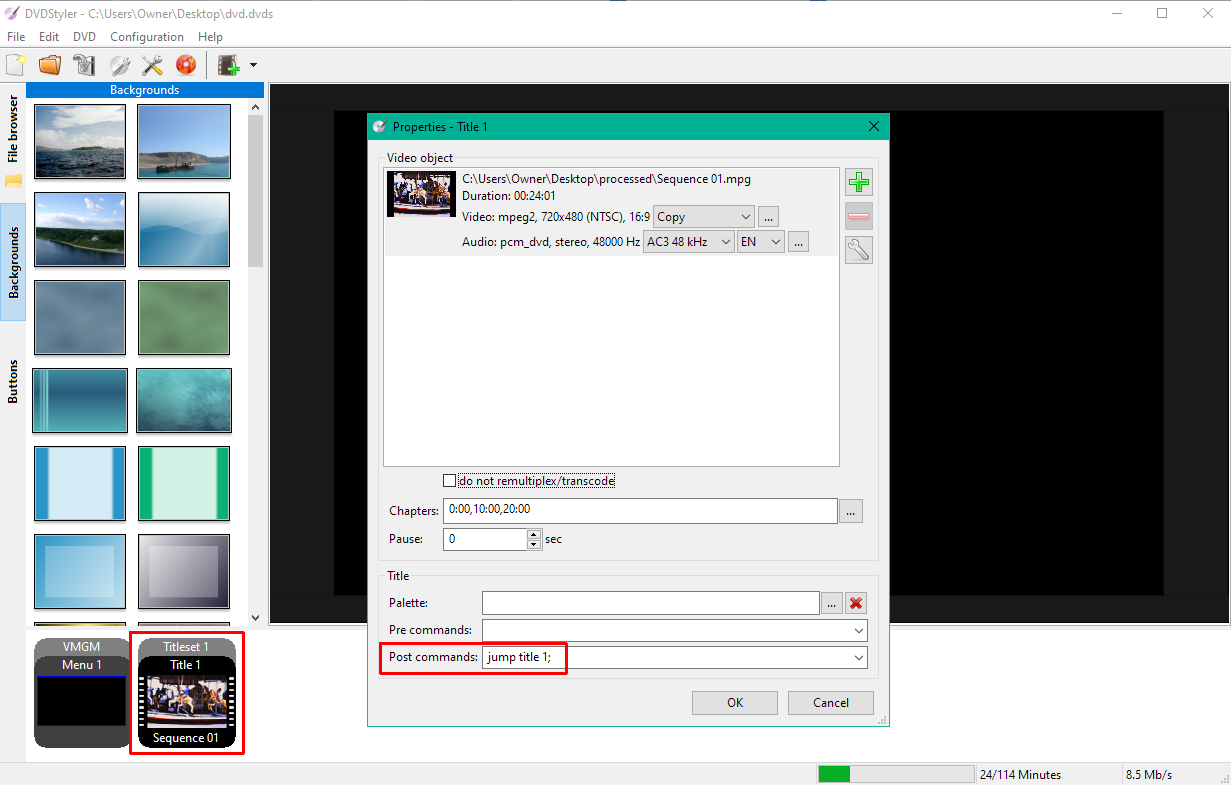

The DVDs worked on his computer as well. His Blu-ray player, however, would loop after only a few photos. I’d never seen anything like this before. If a DVD plays at all on the player, it generally plays exactly as it does on the computer. This was an exception, and it didn’t make any sense. Did I check a box I shouldn’t have? Did I accidentally set a DVD command that I shouldn’t have? Did I use the wrong burning mode? I always run a verification pass after burning, so the data should be fine…right? What has changed?

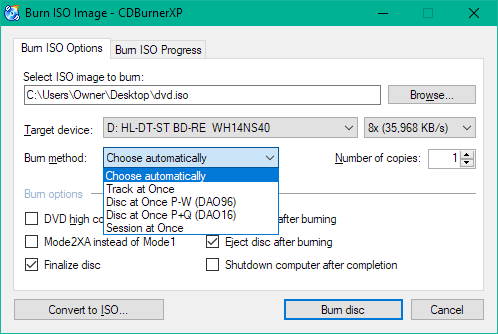

I ran through a bunch of possibilities and got the same failure every time. Disc-at-once vs. track-at-once vs. session-at-once: no dice. Burn speed: no dice (and it shouldn’t be a problem anyway). DVD high compatibility mode: no dice. None of these burning options changed anything. Re-authoring the DVD also made no difference. I was pretty screwed. I thought I had tried everything and that it would go down as one of those random things I’d never find an answer to…but then the customer visited, wanting a status update and eager to get his player back, so I revisited the problem and put on my “what have I missed?” troubleshooting cap.

The player itself probably wasn’t the issue. The DVD burning method and options weren’t the issue. The DVD-R format wasn’t the issue. The DVD authoring work I did wasn’t the issue. What’s left?

Something About the Picture Files

I noticed that the thumbnails weren’t loading for some of the pictures, but I figured that wasn’t a problem because the pictures opened up just fine. I decided to make the DVD slideshow video myself in Premiere Pro instead of letting the (fantastic and free!) DVD authoring program DVDStyler produce the slideshow for me. That’s when I got an error message that tipped me off to the actual problem (paraphrased): “[4 images]: the dimensions are too large to import.” I looked at the files and noticed that they were the same ones with no thumbnails…and that’s when I opened one and saw the actual problem.

The largest of these images were over 20,000 pixels wide. They were all high-DPI scans of old pictures and the resolution was massive; several of the others were 12,000 pixels wide. Scaling down the 20,000-pixel images let them import into Premiere Pro, but any attempt to export them would crash Premiere hard. It quickly became obvious that the extreme resolution of these images was responsible for all of my problems. DVDStyler uses the open source video processor FFmpeg to create the DVD video, including mixing photos into a slideshow video, and that program doesn’t handle such high resolutions very well.

The end result was a DVD video that was corrupt where the huge pictures were supposed to be. The computer players would skip over the corruption, but the customer’s standalone player would immediately assume that the corruption was the end of the stream and kick back to the auto-play loop I had set up. This is why the customer said it would play only a few pictures before looping.
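To make the difference between the two kinds of players concrete, here is a toy model in Python. It is not how any real DVD player firmware works; it just assumes a stream is a list of frames, with a hypothetical "CORRUPT" marker standing in for a piece of video that fails to decode, and shows what a lenient player and a strict player would each end up showing.

```python
from typing import List

def play(frames: List[str], strict: bool) -> List[str]:
    """Return the frames a (hypothetical) player would actually show.

    "CORRUPT" is a made-up marker for a section that fails to decode.
    """
    shown = []
    for frame in frames:
        if frame == "CORRUPT":
            if strict:
                break      # strict player: treat damage as end of stream, go back to the loop
            continue       # lenient player: skip the damage and keep going
        shown.append(frame)
    return shown

slideshow = ["photo1", "photo2", "CORRUPT", "photo3", "photo4"]
print(play(slideshow, strict=False))  # ['photo1', 'photo2', 'photo3', 'photo4']
print(play(slideshow, strict=True))   # ['photo1', 'photo2']
```

The strict version matches the symptom: a few photos, then straight back to the start.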

Scaling Down This Operation

I used the excellent and free image viewer and simple editor IrfanView to batch resize all of the images so that their “long side” was 1920 pixels maximum, with the option checked to not resize smaller images. This limited all of the images to a resolution that I knew my tools could handle…and that was the end of all my problems! The size of the images being fed to the slideshow maker wasn’t even something I thought of as a possibility until Premiere complained about the pictures. Sometimes the solution to your problem is to walk away and re-examine it from scratch.
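The resize rule I used in IrfanView boils down to a bit of arithmetic. The sketch below is my own illustration, not IrfanView’s code: cap the long side at 1920 pixels, keep the aspect ratio, and leave anything already small enough alone. The 20,000 × 15,000 size in the example is hypothetical, since I only know the width of the biggest scans.

```python
from typing import Tuple

def fit_long_side(width: int, height: int, max_long: int = 1920) -> Tuple[int, int]:
    """Scale (width, height) so the longer side is at most max_long pixels.

    Images that already fit are returned unchanged ("don't resize smaller
    images"); otherwise both sides shrink by the same factor, rounded to
    whole pixels.
    """
    long_side = max(width, height)
    if long_side <= max_long:
        return width, height
    scale = max_long / long_side
    return max(1, round(width * scale)), max(1, round(height * scale))

print(fit_long_side(20000, 15000))  # (1920, 1440)
print(fit_long_side(1200, 1600))    # (1200, 1600): left alone
print(fit_long_side(9000, 12000))   # (1440, 1920): portrait, height is the long side
```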

How Big Are These Pictures?

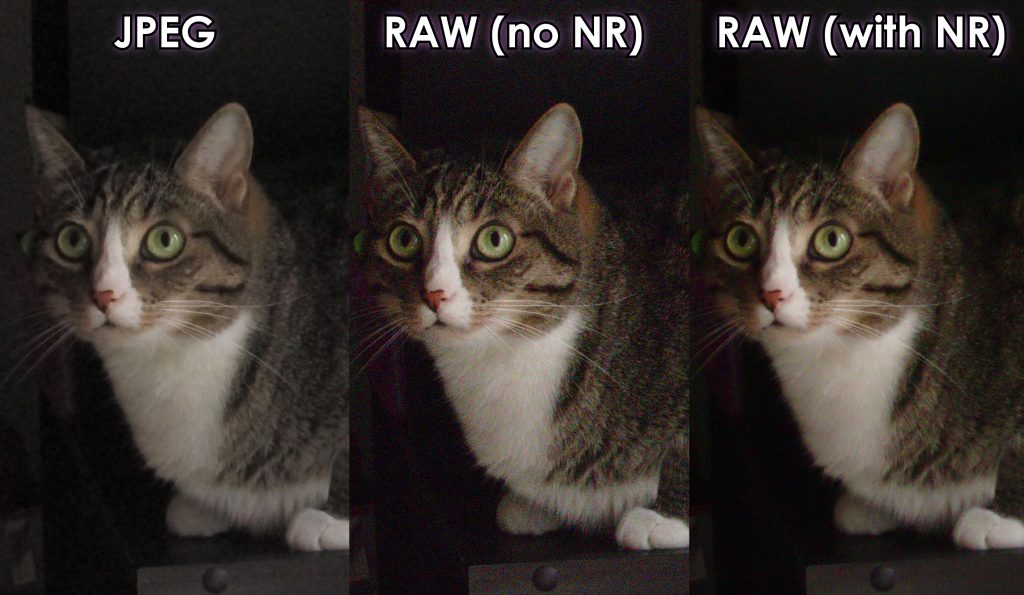

It’s amazing how the limits set in the past can affect the future. To put the colossal size of these images into perspective, the highest-end digital cameras in the early 2000s could only take photographs up to about 4 megapixels. An entry-level DSLR camera in 2010 would have 16 or more megapixels of resolution, and 20MP-24MP was standard for entry-level gear by 2020. High-end monsters like the Fujifilm GFX 100S have reached 100MP and beyond, and this is where the camera technology of today (2022) meets the images I was given. The resolution of a good photo scanner is higher than that of a typical digital camera, and with cheaper photo scanners offering interpolation (also known as “up-sampling”) to higher resolutions than they physically support, it’s very easy for a casual user to “turn the knobs to 11” on the assumption that they’ll be capturing their photographic memories in the highest quality possible.
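Scan resolution is simple multiplication: the print’s size in inches times the scanner’s DPI setting gives the pixel dimensions. I don’t know the actual print sizes or DPI settings behind the customer’s files, so the 6 × 4 inch print and the list of DPI values below are hypothetical, chosen only to show how quickly the numbers run away.

```python
from typing import Tuple

def scan_pixels(width_in: float, height_in: float, dpi: int) -> Tuple[int, int]:
    """Pixel dimensions of a scan: physical size (inches) times dots per inch."""
    return round(width_in * dpi), round(height_in * dpi)

# A hypothetical 6 x 4 inch print scanned at increasingly silly settings.
for dpi in (300, 600, 1200, 3200):
    w, h = scan_pixels(6, 4, dpi)
    print(f"{dpi:>5} DPI -> {w} x {h} = {w * h / 1e6:.1f} MP")
```

At 3200 DPI that little print is already 19,200 pixels wide, roughly 246 megapixels, which is right in “20K” territory.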

The Interpolated DPI is a Lie

Everything beyond the actual resolution of the scanner is fake, created the same way that you’d create it if you dragged the image into an editor and made it a higher resolution (you can’t add detail that doesn’t exist in the original data, only guess at what might have been there). The interpolated image isn’t any better; it’s just much bigger, pretending to be as detailed as a GFX 100S photo when it’s not.
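Here is a one-dimensional sketch of why interpolated pixels carry no new information. I’m using plain linear interpolation as a stand-in; I don’t know which method any particular scanner uses (bicubic is common), but the principle is the same: every new value is computed from the existing ones.

```python
from typing import List

def upsample_linear(samples: List[float], factor: int) -> List[float]:
    """Linearly interpolate a row of pixel values by an integer factor.

    Each inserted value is a weighted average of two existing neighbours,
    so it can never contain detail that wasn't in the original row.
    """
    out: List[float] = []
    for i in range(len(samples) - 1):
        a, b = samples[i], samples[i + 1]
        for step in range(factor):
            t = step / factor
            out.append(a + (b - a) * t)  # a guess between a and b
    out.append(samples[-1])
    return out

row = [0, 100, 40]
bigger = upsample_linear(row, 4)
print(len(bigger))  # 9 values instead of 3
print(bigger[::4])  # every 4th value is just the original row again
```

Three real pixels became nine, but throw away the guesses and you’re left with exactly what you started with.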

We’re Reaching These Limits Anyway

The resolution limits of Premiere Pro and FFmpeg are quite high. They are unlikely to be encountered by the vast majority of people using those programs, especially since they’re made for working with video rather than still photography. Most video cameras nowadays offer 4K video resolution options, and high-end video cameras exist with up to 8K video resolution. This sounds like a lot (and make no mistake, it is a lot of pixels), but when you reduce the frame size to a megapixel count, you can start to see why video tools aren’t made to handle such massive images (list sorted by megapixel count):

- Full HD (1080p) video is about 2MP; most common computer monitors, laptops, tablets, phones, and televisions support this resolution or lower

- 4K video is 8.29MP; more expensive digital devices and video cameras support this video resolution and nothing higher

- Entry-level DSLR/MILC cameras are 20MP-24MP, roughly 10 times more pixels than your average television or computer or phone or tablet can show

- 8K video is 33.2MP and is the highest resolution offered by any digital video camera I am aware of, with almost no screens supporting this resolution natively at all

- Professional DSLR/MILC cameras get closer to 50MP, leaving the resolution of even 8K video in the dust

- Recent cell phones are 48MP-64MP, but there’s a catch. With the switch from traditional Bayer sensors to quad Bayer sensors, phone megapixel counts are skyrocketing, but they’re also hitting limitations (primarily the limits of diffraction) that mean you don’t actually get 64MP of detail…but the raw number is still high, so here it sits!

- The Phase One IQ4 medium-format camera is 150MP and costs as much as a fancy pickup truck!
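The video figures in that list are just width times height. This short script reproduces them and adds a hypothetical 20,000 × 15,000 scan for comparison (only the width comes from the real files; the height is my assumption).

```python
from typing import Dict, Tuple

RESOLUTIONS: Dict[str, Tuple[int, int]] = {
    "Full HD (1080p)": (1920, 1080),
    "4K UHD": (3840, 2160),
    "8K UHD": (7680, 4320),
    "Hypothetical 20K scan": (20000, 15000),
}

def megapixels(width: int, height: int) -> float:
    """Total pixel count in millions."""
    return width * height / 1_000_000

full_hd = megapixels(1920, 1080)
for name, (w, h) in RESOLUTIONS.items():
    mp = megapixels(w, h)
    print(f"{name:<22} {w:>6} x {h:<6} {mp:7.2f} MP ({mp / full_hd:5.1f}x Full HD)")
```

Full HD works out to 2.07MP, 4K to 8.29MP, and 8K to 33.18MP, while a 20MP photo is about 9.6 times a Full HD frame. The hypothetical scan is 300MP, nearly 145 Full HD screens’ worth of pixels.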

This Was Not What They Planned

It’s fairly obvious that while 8K is the pinnacle of video resolution, it’s nothing compared to what you get out of a modern photo camera. It’s not surprising that tools created with 4K in mind and 8K as a maximum don’t like to be fed “12K” images. In fact, the scanned photos that caused me all of this grief reached as high as 20,000 pixels wide (“20K” if you prefer) and there’s no way that these video tools ever considered that as a possibility when they were written…and why would they? Your devices probably can’t show more than Full HD (“2K”) in the first place, so why even consider 20K as a possibility?

Think about the original problem that brought us to all of this crazy discussion: I tried to make a slideshow video on a DVD. It’s not an uncommon task. FFmpeg and Premiere Pro are both designed to take images and pretend they’re video clips, and they do that quite nicely. People have been making DVDs with picture slideshows using these tools for well over a decade.

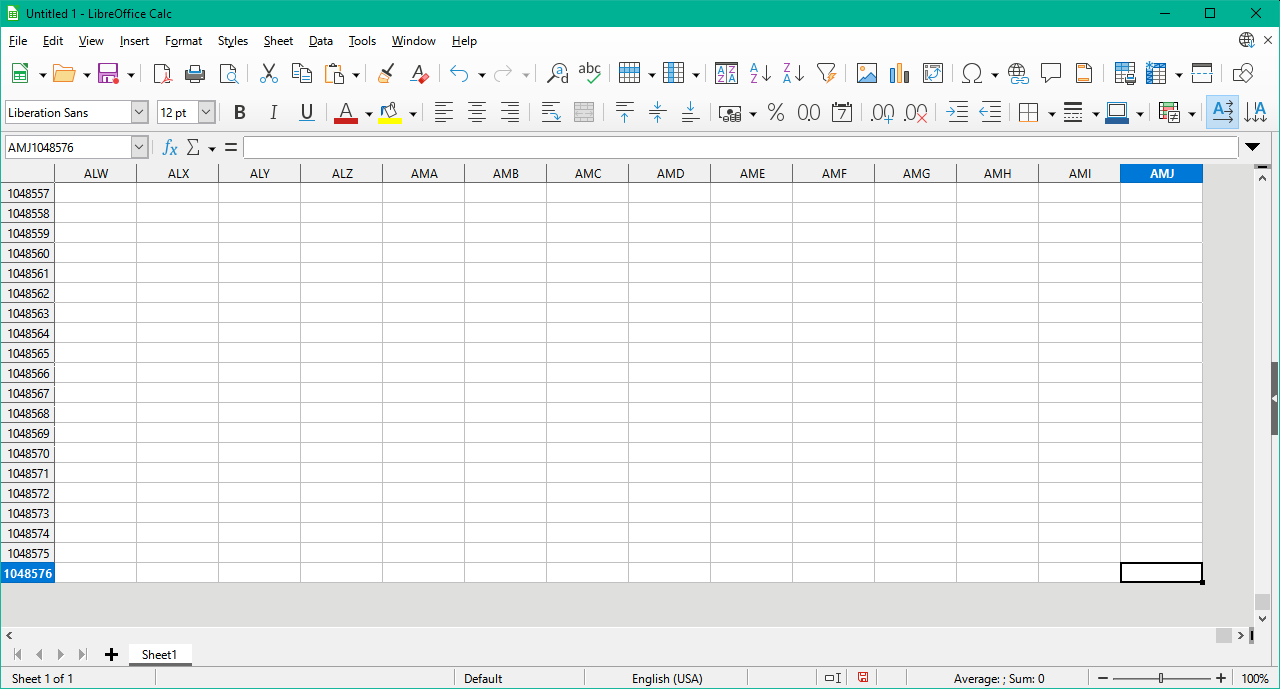

The problem is that our technology evolves and our tools don’t necessarily have to evolve with it until it becomes a real problem. A lot of assumptions are baked into the software that we use on a daily basis, and the vast majority of us will never run into the wall that those assumptions put in our way. Years ago, I noticed that LibreOffice Calc would let me scroll down to row 65,535 (the limit of an unsigned 16-bit integer in programming), but I’ve never tried to make a spreadsheet in Calc with more rows than that. Likewise, I don’t have a single photo with a higher resolution than 24 megapixels, so I’m not likely to try to feed a video editor a photograph I’ve taken that’s any bigger than that. The limits weren’t a problem…until they were. Surely I’m not the first person who has pulled up a dump truck full of pixels to these programs and expected them to take the load gracefully, but at the same time, this scenario is also exceptionally rare.
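Here is a quick way to see what a 16-bit ceiling looks like. This isn’t Calc’s code; it just simulates an unsigned 16-bit counter in Python by keeping only the low 16 bits, which is how fixed-width unsigned arithmetic behaves in languages like C. Counting from zero, the largest value, 65,535, is the 65,536th slot.

```python
UINT16_MAX = 2**16 - 1  # 65,535: largest value an unsigned 16-bit integer can hold

def next_row_u16(row: int) -> int:
    """Advance a simulated unsigned 16-bit counter, keeping only the low 16 bits."""
    return (row + 1) & 0xFFFF

print(UINT16_MAX)                # 65535
print(next_row_u16(65_534))      # 65535
print(next_row_u16(UINT16_MAX))  # 0: the counter wraps around instead of going higher
```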

Should these limits have been put into the software? Should they be removed now that I’ve run into them, they’ve caused me a very real problem, and they ruined the product I delivered to the customer? The answers are not a simple “yes” or “no.” Software limits are often unavoidable because the hardware that runs the software has limits, like the Calc row limit I explained earlier.

Software Limits Are Often Trade-Offs

Software is a constant struggle to maximize speed and efficiency without sacrificing correctness and capability. When it’s faster to keep track of a counter with a 32-bit unsigned integer and you don’t see why anyone would ever need to count past 4,294,967,295 for that counter anyway, you’re going to choose that “big” limit, and you won’t care that it might mean 12K video isn’t supported when 4K hasn’t even been created yet. Ten years later, some weirdo tries to feed a 96MP scanned photo to your program and it chokes, but your more efficient choice still works for the pinnacle of video technology: 8K resolution. This leads into the second question about changing…
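To show how a “big” fixed-width limit can be fine for 8K and still choke on a giant scan, here is a hypothetical check: does one uncompressed frame buffer’s size in bytes fit in a signed 32-bit integer? This is an illustration only. I don’t know the actual limits or checks inside FFmpeg or Premiere Pro, and the 20,000 × 15,000 size and the pixel formats are assumptions.

```python
INT32_MAX = 2**31 - 1   # 2,147,483,647 (signed 32-bit)
UINT32_MAX = 2**32 - 1  # 4,294,967,295 (unsigned 32-bit)

def frame_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of one uncompressed frame buffer in bytes."""
    return width * height * bytes_per_pixel

def fits_int32(width: int, height: int, bytes_per_pixel: int) -> bool:
    """Hypothetical limit: the buffer size must fit in a signed 32-bit int."""
    return frame_bytes(width, height, bytes_per_pixel) <= INT32_MAX

print(fits_int32(7680, 4320, 8))    # True: 8K at 16-bit RGBA is about 265 MB
print(fits_int32(20000, 15000, 4))  # True: the scan at 8-bit RGBA is 1.2 GB
print(fits_int32(20000, 15000, 8))  # False: at 16-bit RGBA it's 2.4 GB
```

Whether a limit like this bites depends on details like the pixel format, which is exactly the kind of thing nobody thinks about until a dump truck of pixels shows up.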

Should The Software Change?

It’s hard to fault the authors of the software for not changing to suit the extreme that I tried to force onto it, especially when it makes every other use case faster. The vast majority of digital cameras out there take photos at or below 24 megapixels and 8K video is 33 megapixels. It doesn’t make a lot of sense to hurt the 8K or less use cases just so I can feed unreasonably huge photos into the software on extremely rare occasions. If video continues to creep up in resolution then things might be different, but the value in going from 4K to 8K is not very high as-is, so 12K video is not likely to be a concern for a long time to come. Perhaps they’ll be forced to change the software, but it will probably come at the expense of performance for all of us, as such improvements often do.

Returning to the start (as all good stories do), the correct answer to this crazy exception is to treat it as a crazy exception and work around it. I’d love to just be able to shove pictures at DVDStyler and get what I want out the other end, but I’m one user out of millions, and the vast majority of them will never run into this problem. I don’t want the program to become slower for this once-in-a-lifetime (I hope) use case. I don’t think DVDStyler or FFmpeg or Premiere Pro should change to make this problem go away.

Or at least, not yet.

When video and images get to the point that it’s a more mainstream issue, it’ll be an appropriate time to change. Until then, I’m happy that I can work around it with IrfanView’s batch processing on the wild chance that I ever have this problem again.

I hope that you’ve found this discussion to be interesting and informative. Feel free to leave your thoughts in the comment section below. Comments are moderated and don’t appear immediately, but I’ll approve them as quickly as I can.